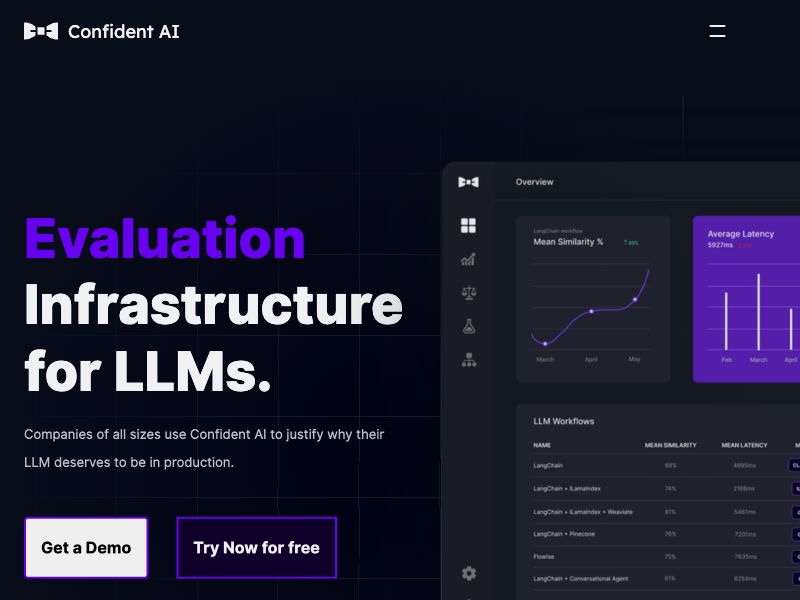

Confident AI

Open-source evaluation infrastructure for LLMs

Confident AI offers DeepEval, an open-source package that lets engineers evaluate, or "unit test", the outputs of their LLM applications. Confident AI, our commercial offering, lets you log and share evaluation results across your organization, centralize the datasets you use for evaluation, debug unsatisfactory evaluation results, and run evaluations in production throughout the lifetime of your LLM application. We offer 10+ default metrics that engineers can plug in and use.

More products

Find products similar to Confident AI

NextSaas

The Ultimate Next.js Boilerplate for SaaS Applications

Cloudbrand

White-label Dropbox alternative for digital agencies

nextDirectory

The Ultimate Next.js Directory Boilerplate.

NoForm AI

Capture & Qualify Leads 24/7 with AI Sales Assistant

SaasCore

A directory of devtools to build your next SaaS in no time.

Tggl

Scale your product risk-free